Optimise Your Research Workflow using GenAI, Part 2: Agents

This post continues our exploration of how Generative AI (GenAI) tools can enhance research workflows. In Part 1, we discussed no-code GenAI tools such as ChatGPT and Perplexity that streamline research processes. Here in Part 2, we focus on “agentic workflows”: combining tools and agents to automate complex workflows and interact with large data sets and multiple sources, boosting productivity through new capabilities.

Photo by Sara Kurfeß on Unsplash

Research agents

First, let’s look at some examples of agents.

What is an AI agent?

An AI agent is a system that uses an LLM to decide the control flow of an application.
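To make the definition concrete, here is a minimal Python sketch of the idea. The `fake_llm_router` function is a hypothetical stand-in for a real model call, and the two tools are placeholders; the point is only that the model, not hard-coded logic, picks which step runs next and when to stop.

```python
from typing import Callable

# Hypothetical placeholder tools the agent can choose between.
def web_search(query: str) -> str:
    return f"search results for {query!r}"

def summarise(text: str) -> str:
    return f"summary of ({text})"

TOOLS: dict[str, Callable[[str], str]] = {"search": web_search, "summarise": summarise}

def fake_llm_router(history: list[str]) -> str:
    """Stand-in for an LLM call that picks the next action.
    A real agent would prompt a model with the history and parse its reply."""
    if not history:
        return "search"
    if len(history) == 1:
        return "summarise"
    return "finish"

def run_agent(task: str, router: Callable[[list[str]], str], max_steps: int = 5) -> list[str]:
    history: list[str] = []
    current = task
    for _ in range(max_steps):  # hard cap so a confused router cannot loop forever
        action = router(history)  # the "LLM" decides the control flow
        if action == "finish":
            break
        current = TOOLS[action](current)
        history.append(current)
    return history

steps = run_agent("GraphRAG vs LightRAG", fake_llm_router)
```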

Examples

Coding Assistants:

These agents help developers generate code, debug errors, suggest algorithms, and automate documentation, streamlining workflows in software development.

Legal Assistants:

These agents automate document reviews, summarise cases, and draft contracts, improving accuracy and reducing workload in compliance-heavy industries.

Customer Service Agents:

Equipped with multi-modal capabilities, these agents handle customer queries via text, voice, and even images. They personalise responses, resolve issues efficiently, and escalate complex problems to human representatives, improving overall customer satisfaction.

Enterprise Agents:

These agents are used in businesses to answer layered questions by decomposing tasks and retrieving data with tools such as retrieval-augmented generation (RAG). They often take over multi-step task workflows, applying LLM-driven reasoning or decision making at each step.

So let’s take a look at research agents:

Example: GPT Researcher

A popular open-source tool, GPT Researcher, expands on a user’s initial query by generating and asking multiple questions, producing a comprehensive report.

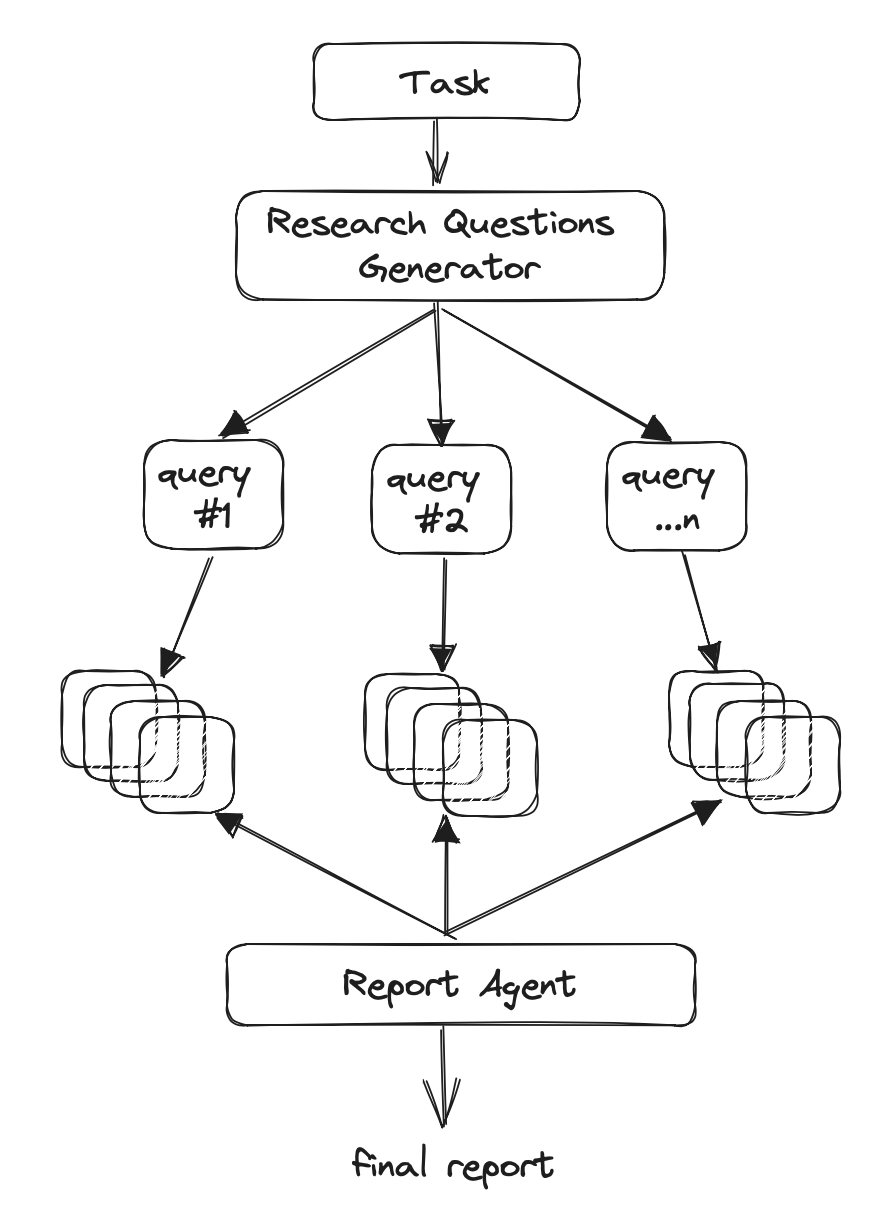

To conduct research efficiently, GPT Researcher follows a multi-agent approach. The process begins with outlining research questions that together form an objective opinion about a task. For each question, a crawler agent gathers relevant online resources. The system then filters and summarises information from these sources, retaining only relevant content. Finally, it aggregates the summaries into a comprehensive research report.
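The four stages above can be sketched as a plain Python pipeline. This is not GPT Researcher’s code: `generate_questions`, `crawl`, `is_relevant` and `summarise` are hypothetical stand-ins for LLM and crawler calls, and the keyword-overlap filter is a deliberately naive substitute for LLM-based relevance filtering.

```python
from dataclasses import dataclass

@dataclass
class Source:
    url: str
    text: str

def generate_questions(task: str) -> list[str]:
    # Stand-in for an LLM "planner" prompt that expands the task into sub-questions.
    return [f"What is {task}?", f"What are the limitations of {task}?"]

def crawl(question: str) -> list[Source]:
    # Stand-in for a crawler agent; a real one would call a search API and scrape pages.
    return [
        Source("https://example.com/a", f"{question} Detailed answer."),
        Source("https://example.com/ads", "Buy now! Unrelated advert."),
    ]

def is_relevant(question: str, source: Source) -> bool:
    # Naive keyword-overlap filter (words longer than 4 characters) instead of an LLM check.
    keywords = {w.lower().strip("?") for w in question.split() if len(w) > 4}
    return any(k in source.text.lower() for k in keywords)

def summarise(source: Source) -> str:
    return source.text[:80]  # placeholder for an LLM summary

def research(task: str) -> str:
    sections = []
    for question in generate_questions(task):           # 1. outline questions
        relevant = [s for s in crawl(question)           # 2. gather sources
                    if is_relevant(question, s)]         # 3. filter
        summaries = [f"{summarise(s)} [{s.url}]" for s in relevant]
        sections.append(f"## {question}\n" + "\n".join(summaries))
    return "\n\n".join(sections)                         # 4. aggregate into a report

report = research("GraphRAG")
```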

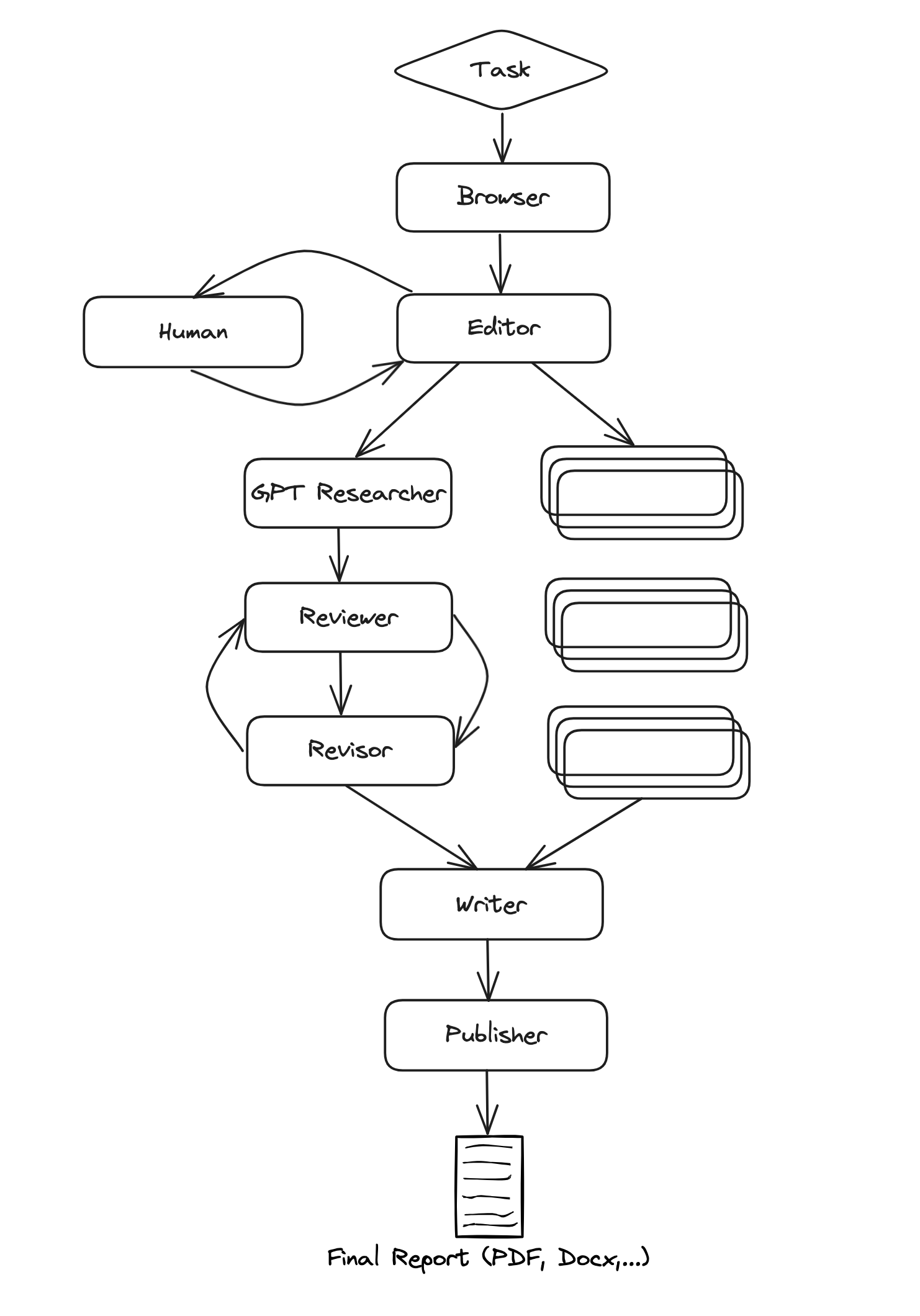

This workflow combines a human overseer with seven specialised AI agents:

- Human: Oversees the process and provides feedback.

- Chief Editor: Manages the research process and coordinates all agents using LangGraph.

- Researcher (gpt-researcher): Conducts in-depth investigations on the topic.

- Editor: Plans the research outline and structure.

- Reviewer: Validates the research for correctness based on specific criteria.

- Revisor: Refines results based on reviewer feedback.

- Writer: Compiles and writes the final report.

- Publisher: Publishes the report in various formats.

This structured workflow is designed to improve accuracy, relevance, and output quality. You can learn more about implementing these systems in the GPT Researcher documentation.
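As a rough illustration of how a chief editor might coordinate these roles, the sketch below wires hypothetical stand-ins for the Editor, Researcher, Reviewer, Revisor, Writer and Publisher into a bounded review/revise loop. The real project builds this as a LangGraph graph; this stdlib-only version only shows the control flow, uses a toy review criterion, and leaves out the human feedback step.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ResearchState:
    topic: str
    outline: list[str] = field(default_factory=list)
    drafts: dict[str, str] = field(default_factory=dict)
    report: str = ""

def editor(state: ResearchState) -> None:
    # Plans the outline (a real Editor agent would prompt an LLM).
    state.outline = [f"{state.topic}: background", f"{state.topic}: comparison"]

def researcher(section: str) -> str:
    # Stand-in for gpt-researcher; this first draft deliberately lacks a citation.
    return f"Draft for {section}"

def reviewer(draft: str) -> Optional[str]:
    # Returns feedback, or None if the draft passes. Toy criterion: it must cite a source.
    return None if "[source]" in draft else "Add a citation."

def revisor(draft: str, feedback: str) -> str:
    # A real Revisor would rewrite the draft using the feedback text.
    return draft + " [source]"

def writer(state: ResearchState) -> None:
    state.report = "\n\n".join(state.drafts[s] for s in state.outline)

def publisher(state: ResearchState) -> str:
    return f"# {state.topic}\n\n{state.report}"  # Markdown only in this sketch

def chief_editor(topic: str, max_rounds: int = 3) -> str:
    state = ResearchState(topic)
    editor(state)
    for section in state.outline:
        draft = researcher(section)
        for _ in range(max_rounds):  # bounded review/revise loop
            feedback = reviewer(draft)
            if feedback is None:
                break
            draft = revisor(draft, feedback)
        state.drafts[section] = draft
    writer(state)
    return publisher(state)

output = chief_editor("LightRAG")
```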

GPT Researcher breaks reports down into main topics and subtopics, using query expansion to explore each subtopic further. It performs separate searches against the web or an indexed knowledge base to provide depth. In short, the tool:

- Breaks down complex topics into manageable subtopics

- Conducts in-depth research on each subtopic

- Generates a comprehensive report with an introduction, table of contents, and body

- Avoids redundancy by tracking previously written content

- Supports asynchronous operations for improved performance
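Two of those features, concurrent subtopic research and tracking of previously written content, can be sketched with `asyncio`. This assumes exact-string matching is good enough to detect repeats, which is a simplification; `research_subtopic` and its findings are hypothetical placeholders for real searches.

```python
import asyncio

async def research_subtopic(subtopic: str) -> list[str]:
    await asyncio.sleep(0.01)  # stand-in for web or knowledge-base search latency
    # Hypothetical findings; the first line overlaps across subtopics on purpose.
    shared = "Both approaches build a knowledge graph from documents."
    return [shared, f"Key detail about {subtopic}."]

async def build_report(subtopics: list[str]) -> str:
    # Research all subtopics concurrently; gather() keeps results in input order.
    results = await asyncio.gather(*(research_subtopic(s) for s in subtopics))
    written: set[str] = set()  # content already included in earlier sections
    sections = []
    for subtopic, findings in zip(subtopics, results):
        new = [f for f in findings if f not in written]  # skip repeats
        written.update(new)
        sections.append(f"## {subtopic}\n" + "\n".join(new))
    return "\n\n".join(sections)

report = asyncio.run(build_report(["Incremental updates", "Entity extraction"]))
```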

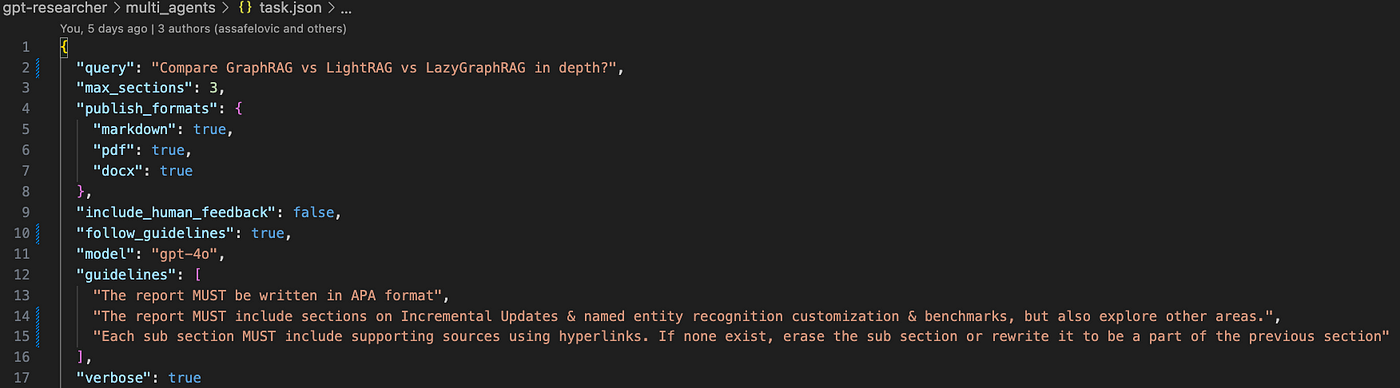

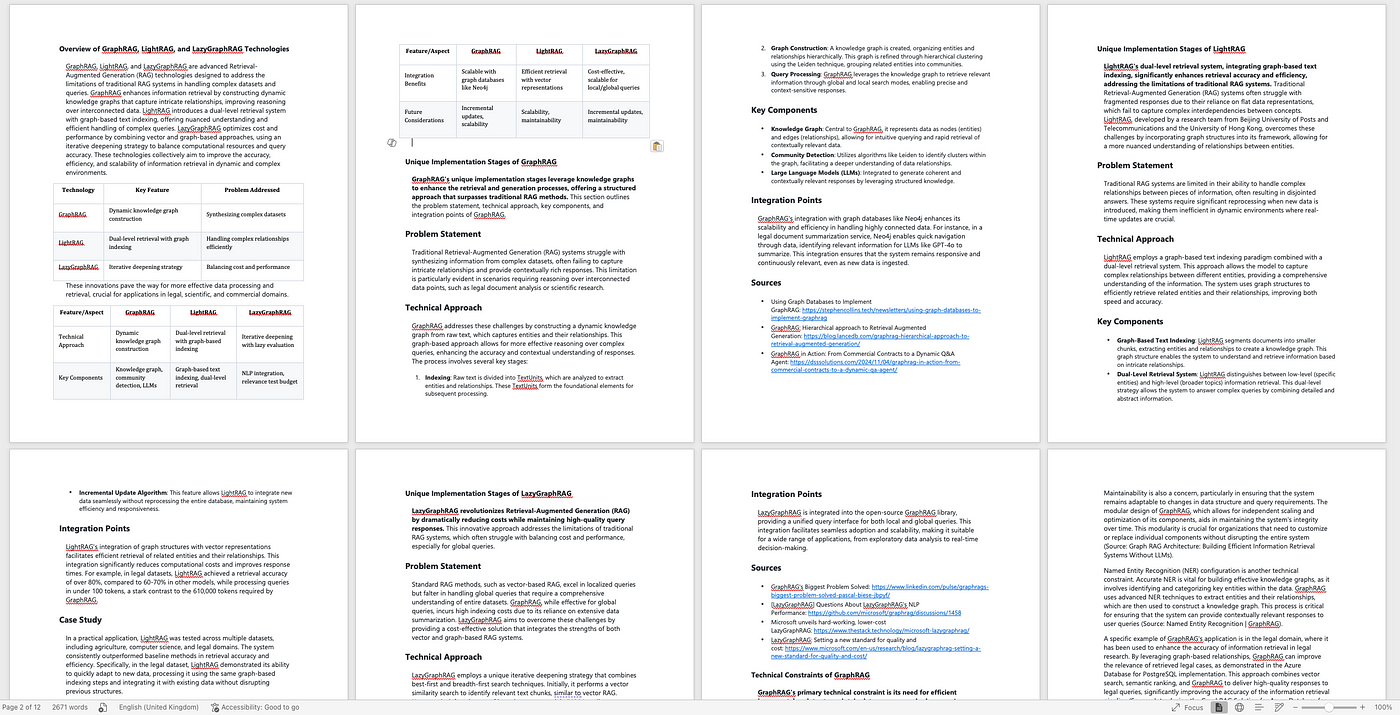

Continuing with the same research topic, we use a task definition to set a similar query:

“search the internet and compare GraphRAG vs LightRAG vs LazyGraphRAG, in depth? Include sections on Incremental Updates & Named entity recognition customisation, but explore other areas”

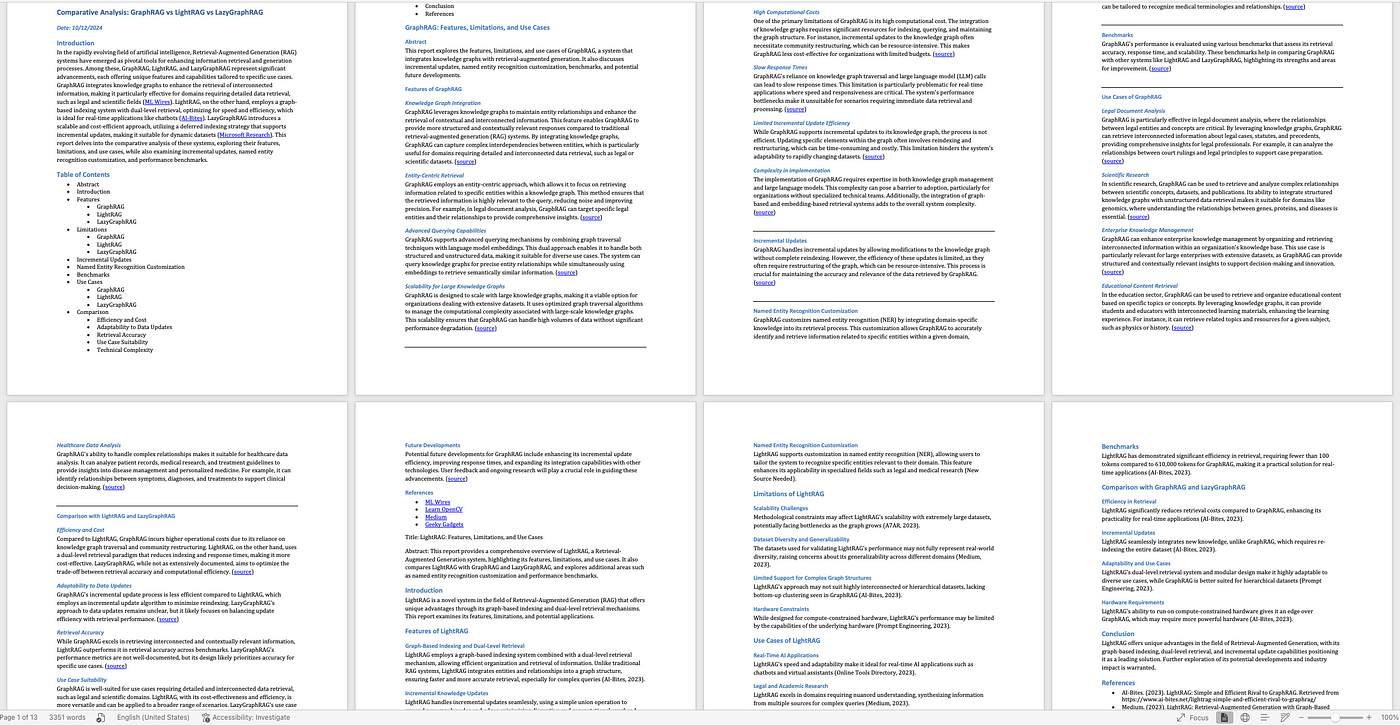

The quality and comprehensiveness of a report produced by GPT Researcher are closely linked to the data gathered during the research process. It effectively defines report topics and selects subtopics for deeper exploration while providing citations for validation, and on the face of it, it seems to have done a great job.

It’s important to point out that this is a great job for an intern: there are still a lot of mistakes or hallucinations that need to be checked.
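One cheap, partial check for hallucinated references is to confirm that every URL cited in the report was actually retrieved during research. The sketch below assumes you have kept the set of retrieved URLs; it only catches fabricated links, not real links cited for claims they don’t support, so manual review is still needed.

```python
import re

# Matches http(s) URLs, stopping at whitespace or a closing bracket/parenthesis.
URL_PATTERN = re.compile(r"https?://[^\s)\]]+")

def unverified_citations(report: str, retrieved_urls: set[str]) -> list[str]:
    """Return cited URLs that were never retrieved, in order of first appearance."""
    cited = [u.rstrip(".,;:") for u in URL_PATTERN.findall(report)]  # drop trailing punctuation
    unique = list(dict.fromkeys(cited))  # de-duplicate while preserving order
    return [u for u in unique if u not in retrieved_urls]

report = (
    "Claim one (https://example.org/lightrag). "
    "Claim two (https://example.org/made-up-post). "
    "Claim three, see https://example.org/lightrag."
)
flagged = unverified_citations(report, {"https://example.org/lightrag"})
```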

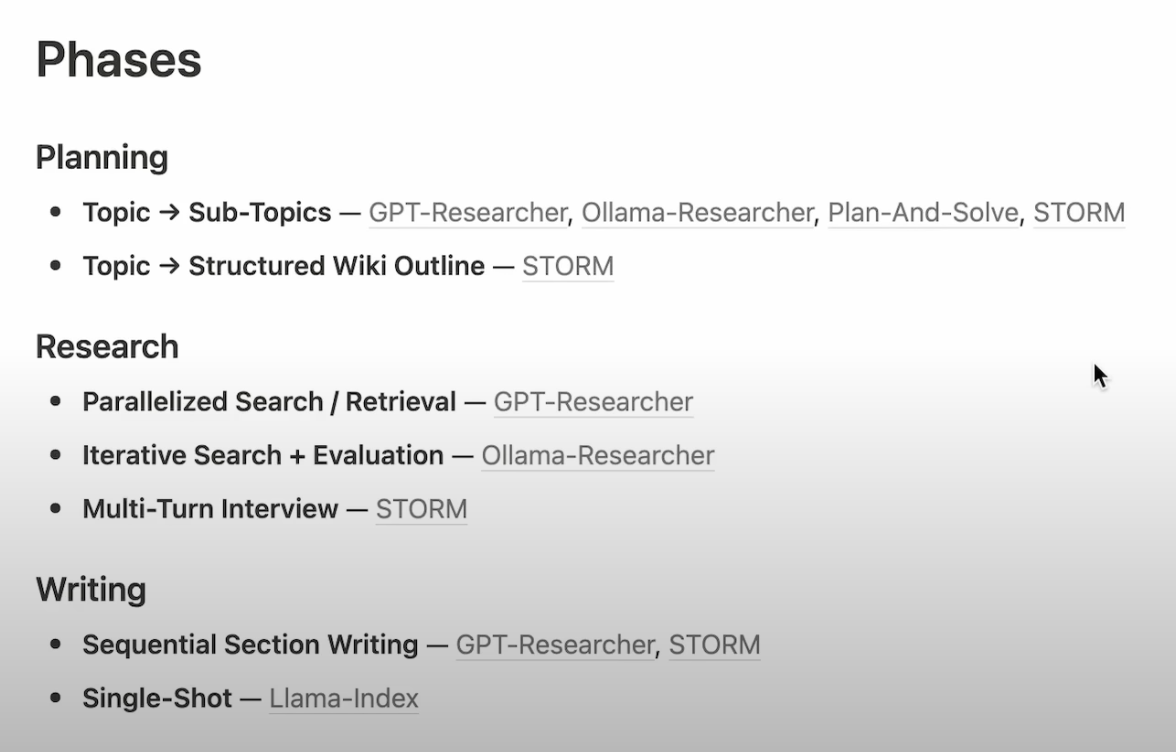

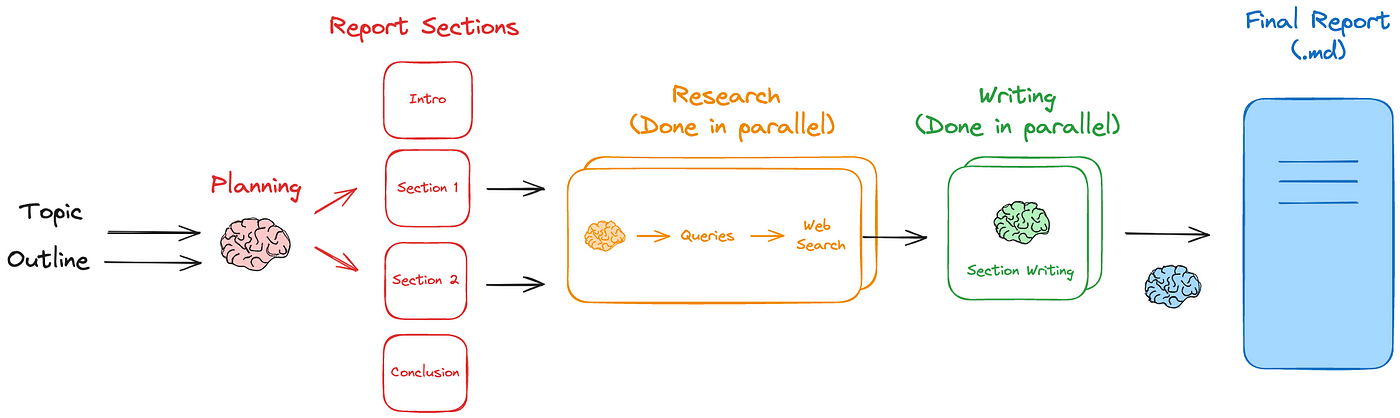

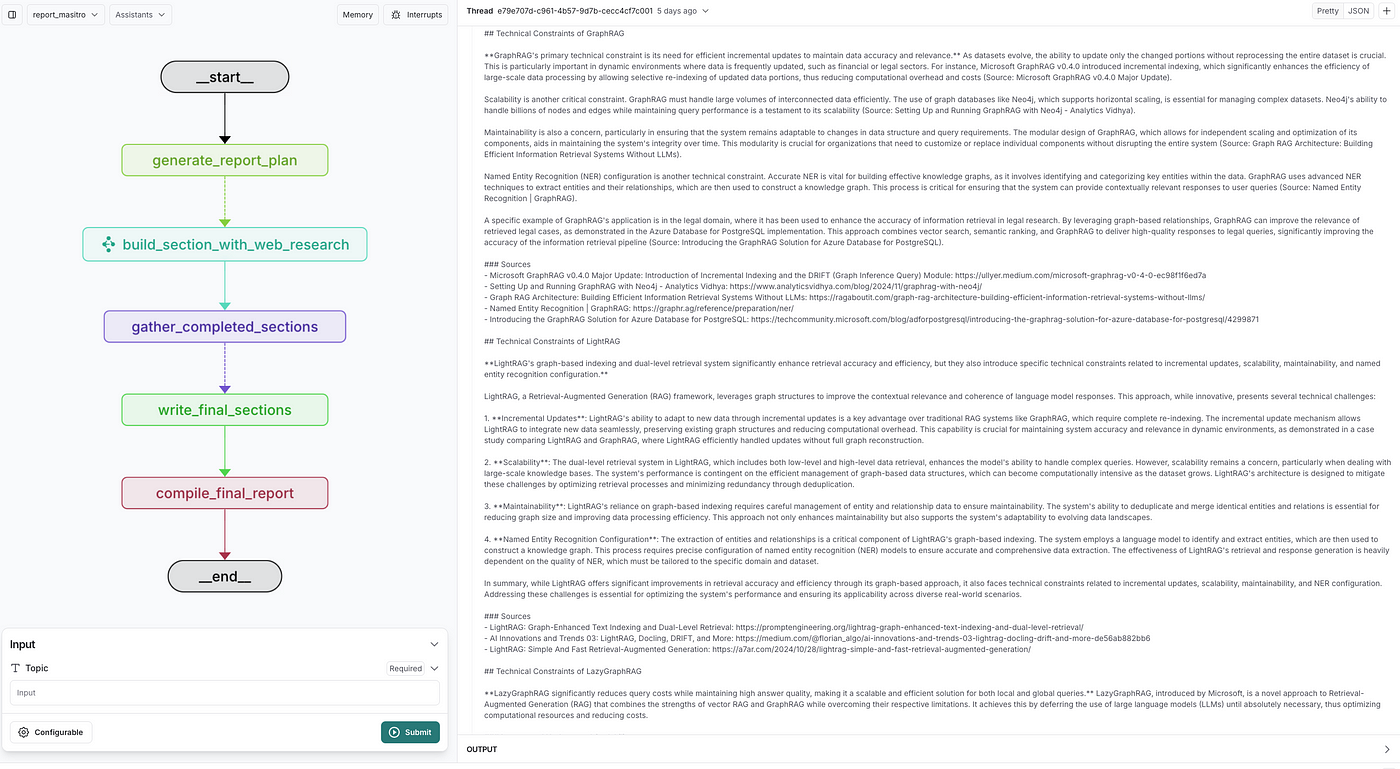

Example: LangGraph Report mAIstro

While I was writing this blog post, the LangChain team released an informative video covering various agent approaches, exploring the common planning, research, and writing patterns in use as of December 2024.

See the full video: https://www.youtube.com/watch?v=wSxZ7yFbbas&t=11s

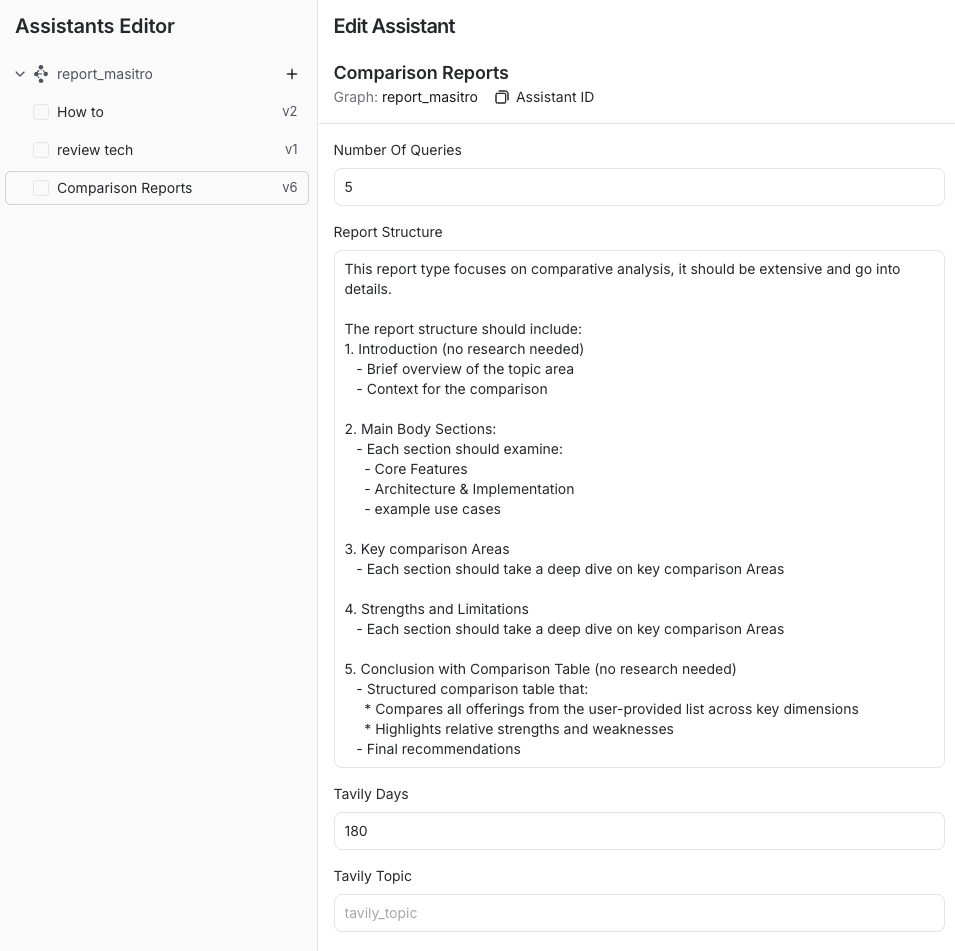

I set up a similar test using Report mAIstro, the research agent from the LangChain team. By adjusting prompts within the code templates (e.g., section word length), I produced comparably in-depth reports. This agent allows easy configuration of report types using prompt engineering and offers several template examples.

- Follows a report structure template, defined at the start, very well.

- Each section conducts in-depth research via a web search or RAG search.

- You can control the introduction and conclusion, and include as many sections as you like.

- It doesn’t appear to track previously written content across sections, as GPT Researcher does.

- Its simple, uncomplicated code structure is easy to experiment with and modify; I could see this becoming a very useful research agent.

- Supports asynchronous research for each section, for good performance.


The lack of cross-section tracking is the main trade-off; in return, the simple code structure makes it easy to experiment, iterate, and add customisation.

Running a report directly in LangGraph Studio shows each section following the template instructions, with the introduction and conclusion integrating all sections. While this method may not suit those who need cross-referencing between sections, customisation can address this need.
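The pattern described here (a fixed template, sections researched in parallel, and an introduction and conclusion written last) can be sketched roughly as follows. `TEMPLATE`, `research_section` and the truncation-based word limit are hypothetical: in Report mAIstro the section length is a prompt instruction, and research uses web or RAG search rather than placeholder text. As with the agent described, sections do not see each other’s content.

```python
import asyncio

# Hypothetical report template: the structure is fixed up front.
TEMPLATE = {
    "introduction": "Overview of the topic",
    "sections": ["GraphRAG", "LightRAG", "LazyGraphRAG"],
    "conclusion": "Comparison summary",
}

async def research_section(name: str, max_words: int) -> str:
    await asyncio.sleep(0.01)  # stand-in for a per-section web or RAG search
    text = f"{name} findings " * 50  # placeholder content (100 words)
    return " ".join(text.split()[:max_words])  # enforce the section word limit

async def write_report(template: dict, max_words: int = 20) -> str:
    names = template["sections"]
    # Each section is researched independently and concurrently.
    bodies = await asyncio.gather(*(research_section(n, max_words) for n in names))
    sections = dict(zip(names, bodies))
    # Intro and conclusion are written last so they can draw on every section.
    intro = f"Introduction: {template['introduction']} covering {', '.join(names)}."
    conclusion = f"Conclusion: {template['conclusion']} across {len(sections)} sections."
    body = "\n\n".join(f"## {n}\n{b}" for n, b in sections.items())
    return f"{intro}\n\n{body}\n\n{conclusion}"

report = asyncio.run(write_report(TEMPLATE))
```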

The final report has similar depth to GPT Researcher’s, but the control over report design and structure is useful for quickly diving into different research areas, customised to my style.

The results are well-structured, readable reports gathered by the LLM “interns”. Adding inline citations, as GPT Researcher does, would make validation easier.
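A minimal way to add numbered inline citations is to keep a URL-to-number map while assembling the text, reusing a number when a source repeats. This sketch assumes each sentence is already paired with the URL it came from, which a real agent would have to track through its summarisation step; `cite_inline` is a hypothetical helper.

```python
def cite_inline(claims: list[tuple[str, str]]) -> str:
    """claims: (sentence, source_url) pairs. Returns text with numbered inline
    citations plus a reference list, reusing a number when a URL repeats."""
    numbers: dict[str, int] = {}
    sentences = []
    for sentence, url in claims:
        n = numbers.setdefault(url, len(numbers) + 1)  # new URL gets the next number
        sentences.append(f"{sentence} [{n}]")
    refs = "\n".join(f"[{n}] {url}" for url, n in numbers.items())
    return " ".join(sentences) + "\n\nReferences\n" + refs

text = cite_inline([
    ("Claim one.", "https://example.org/a"),
    ("Claim two.", "https://example.org/b"),
    ("Claim three.", "https://example.org/a"),
])
```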

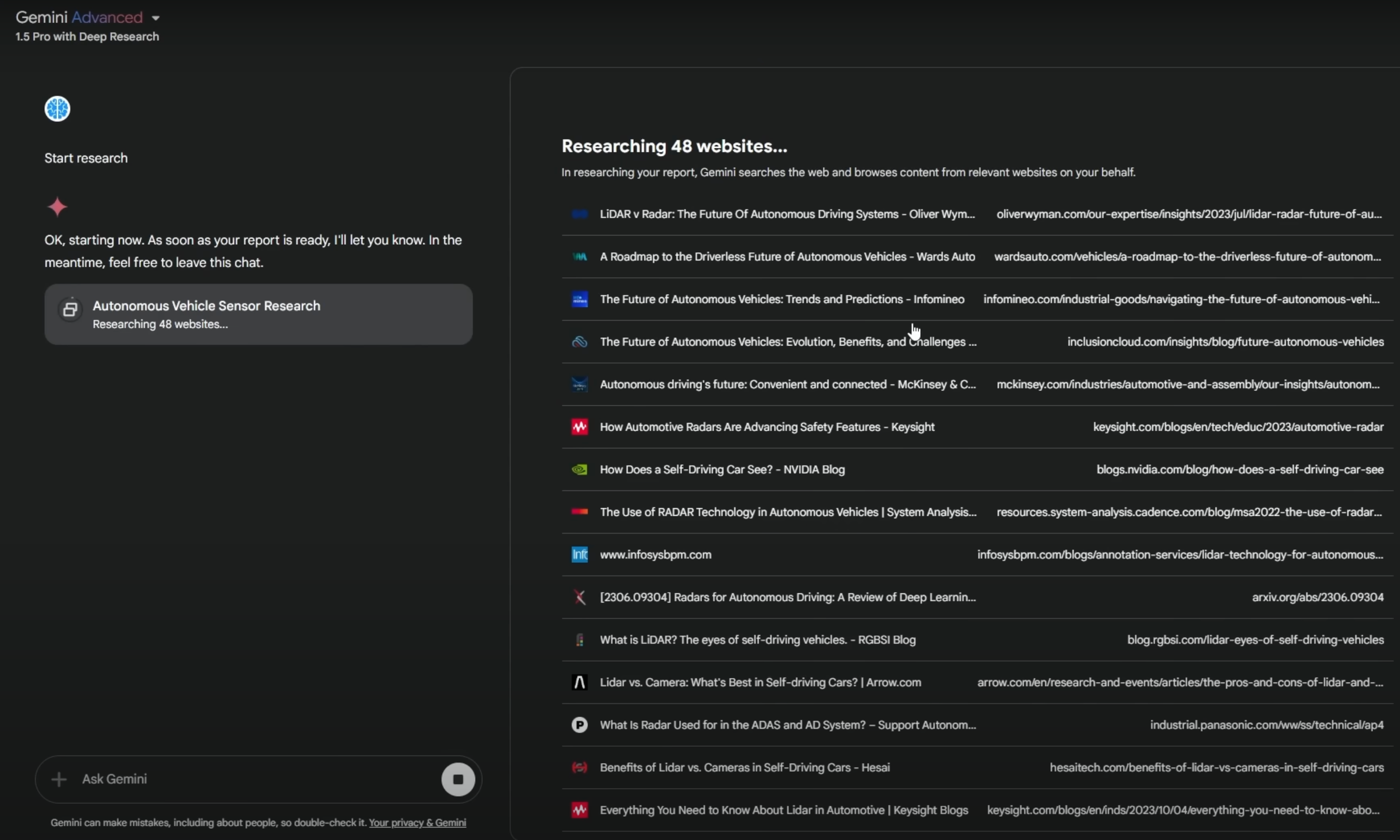

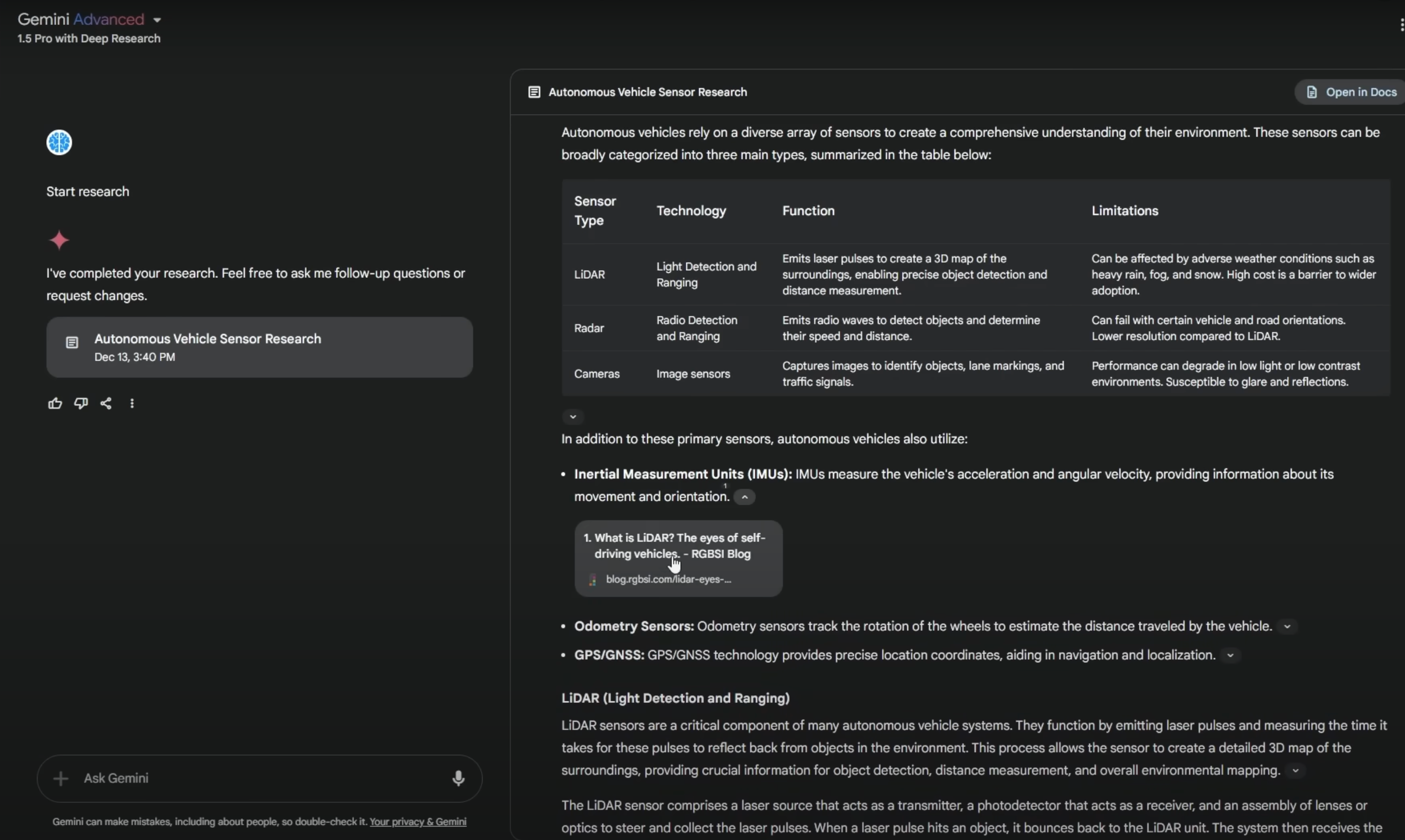

Example: Gemini Advanced

In the GenAI world, it’s currently “🚢 - Shipmas - 🎄”, with Google Gemini releasing a similar agentic workflow product. It looks similar to the open-source tools explored earlier, GPT Researcher and Report mAIstro.

There’s a promising video demonstrating its capabilities; I will add my thoughts on how these products evolve in a future post. For now, check out the demo:

https://www.youtube.com/watch?v=_mpD0dDL66g

The Missing Feature - QA Evaluation

I have explored several research agents, and while they excel at swiftly researching topics and gathering data, the quality of the gathered data remains a concern. These “intern” agent-generated reports often suffer because less relevant sources are selected for context. As usual, it’s garbage in, garbage out, and the current agents do not fully address this. GPT Researcher’s hybrid approach partially addresses it by allowing search results to be combined with personal documents, but the context can still be poisoned by bad web search data.

Addressing this is key to generating quality reports. Search results can be QA’d and triaged automatically using LLM-as-a-judge evaluators, an approach worth exploring.
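A minimal sketch of LLM-as-a-judge triage: build a grading prompt per search result, parse a JSON score, and keep results at or above a threshold. The prompt wording, the 1 to 5 scale and the threshold are assumptions, and `fake_judge` is a keyword-based stand-in for a real LLM call so the example runs offline.

```python
import json
from typing import Callable

JUDGE_PROMPT = """You are grading a web search result for a research report.
Question: {question}
Result title: {title}
Result snippet: {snippet}
Reply with JSON only: {{"score": <1-5>, "reason": "<short reason>"}}"""

def triage(question: str, results: list[dict[str, str]],
           judge: Callable[[str], str], threshold: int = 3):
    """Score each result with a judge and split results into kept and dropped lists."""
    kept, dropped = [], []
    for result in results:
        prompt = JUDGE_PROMPT.format(question=question, **result)  # extra keys are ignored
        try:
            score = int(json.loads(judge(prompt))["score"])
        except (ValueError, KeyError, TypeError):
            score = 0  # malformed judge output is treated as a failing grade
        (kept if score >= threshold else dropped).append({**result, "score": score})
    return kept, dropped

def fake_judge(prompt: str) -> str:
    # Stand-in for a real LLM call: rewards snippets that mention "graph".
    snippet = prompt.split("Result snippet:")[1].split("\n")[0]
    return json.dumps({"score": 5 if "graph" in snippet.lower() else 1, "reason": "stub"})

results = [
    {"title": "LightRAG paper", "snippet": "Graph-based retrieval with incremental updates",
     "url": "https://example.org/1"},
    {"title": "SEO listicle", "snippet": "Top 10 AI tools you must try",
     "url": "https://example.org/2"},
]
kept, dropped = triage("How do GraphRAG and LightRAG differ?", results, fake_judge)
```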

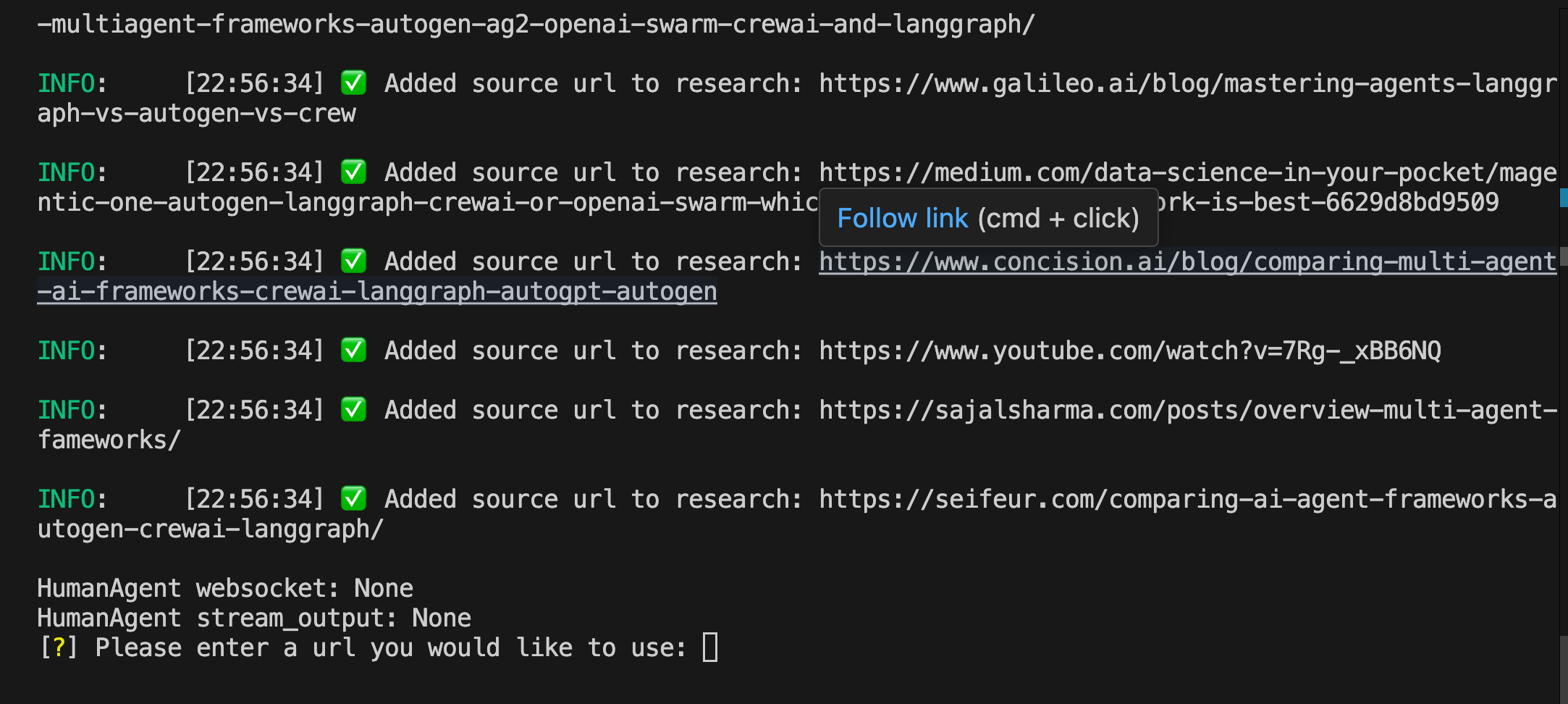

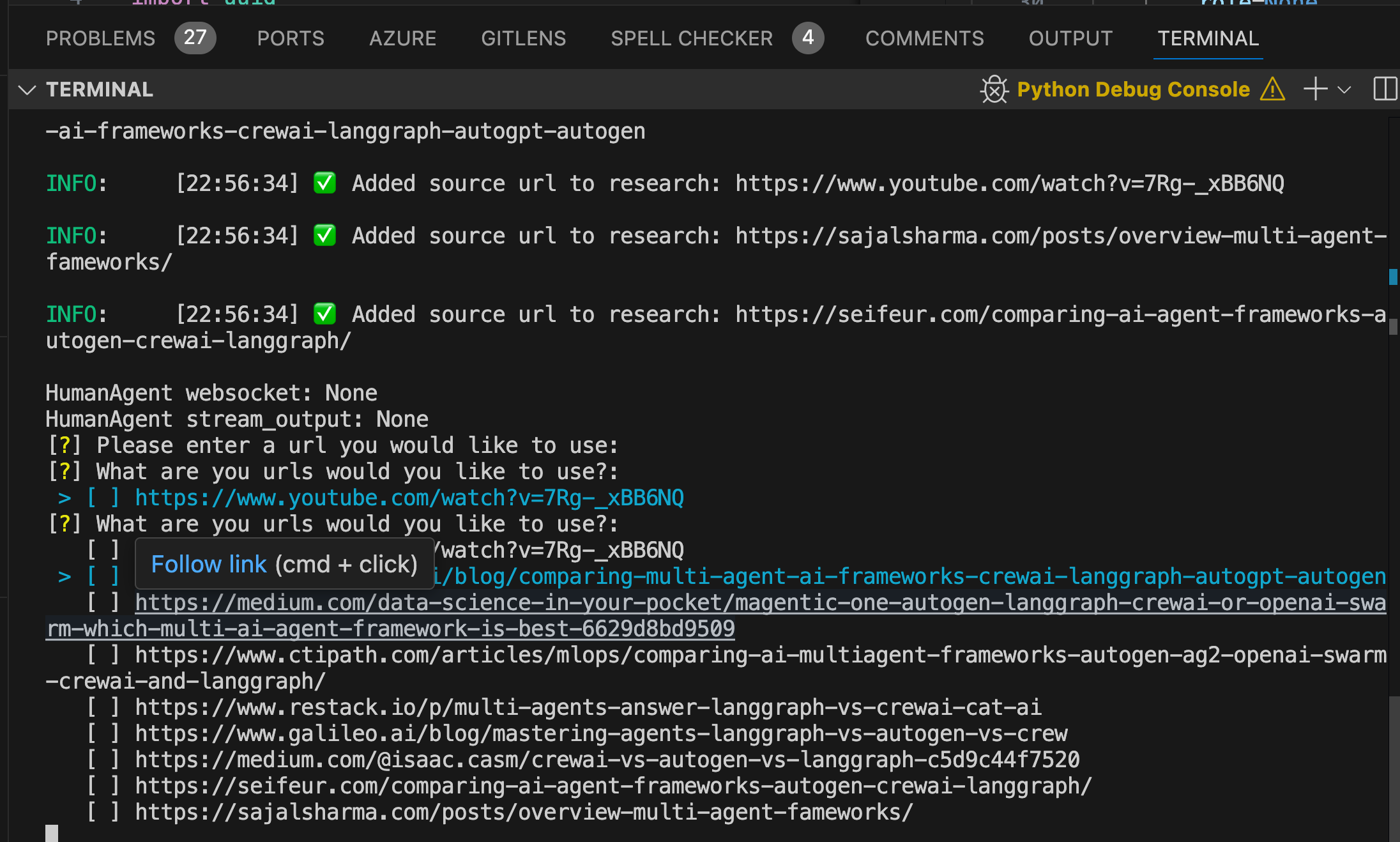

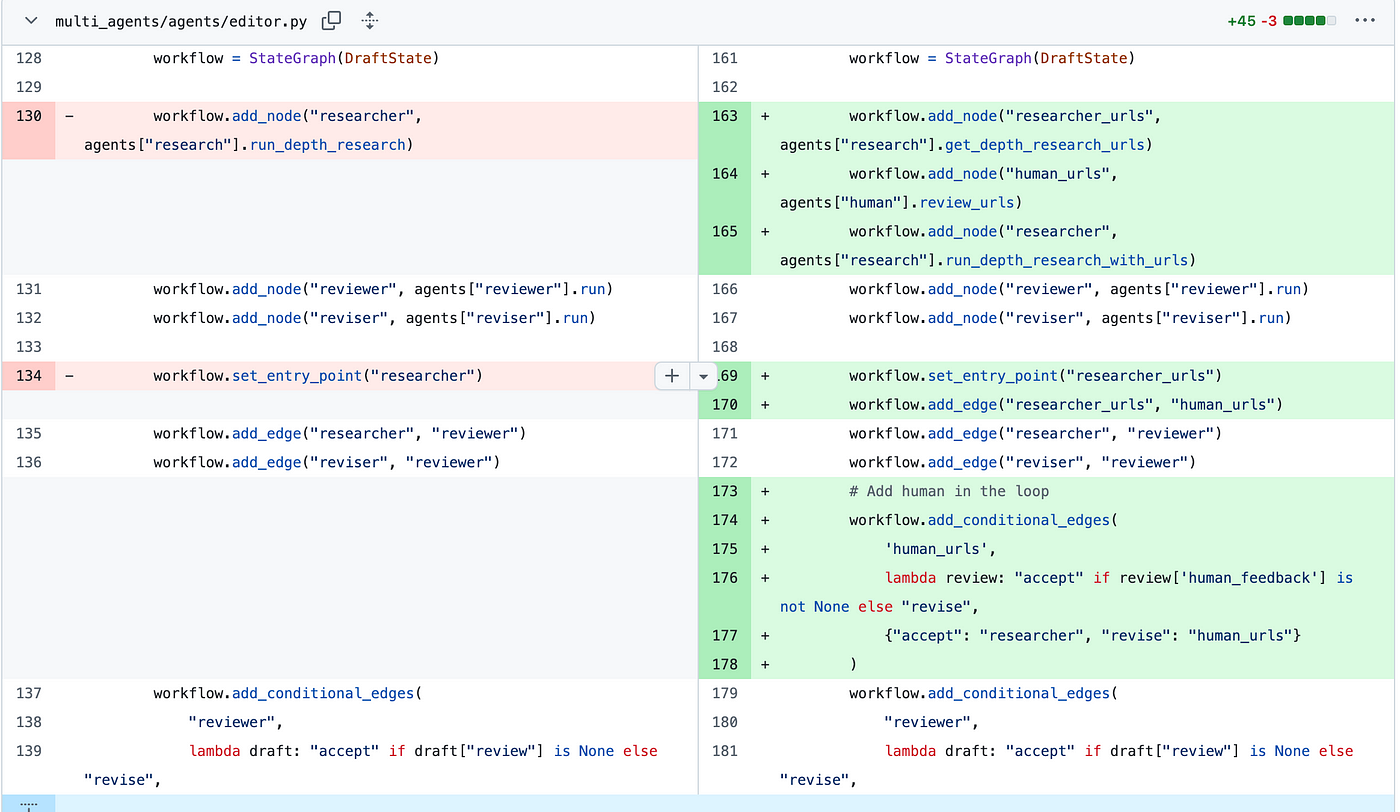

For now, to customise GPT Researcher, I’ve introduced features to triage sources before they are included in reports. This adds a human-in-the-loop (HITL) step where users can review URLs and add useful links, improving report quality and relevance. The same approach could be applied to other agents such as Report mAIstro. Below is my example applied to GPT Researcher, running via the CLI:

- First, you can add sites you want included in the report.

- Next, you can triage the sites gathered at each stage of the research process before they are included in the report.

This is a fairly simple update to the graph flow provided by LangGraph. These enhancements bring human input into the loop, allowing users to curate resources, review the URLs for each section, and incorporate their own links for a more accurate and reliable research process.
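As an illustration (not the code used for the example above), a CLI triage step of this kind could look like the hypothetical function below. `human_triage` shows a section’s URLs, lets the user drop some by number and add their own; the `ask` parameter defaults to the built-in `input` and is injectable so the step can be scripted or tested. In a LangGraph flow, a step like this would sit as a node between the search and writing steps.

```python
from typing import Callable

def human_triage(section: str, urls: list[str],
                 ask: Callable[[str], str] = input) -> list[str]:
    """Show gathered URLs for a section; let the user drop some and add their own."""
    print(f"Sources for section: {section}")
    for i, url in enumerate(urls, start=1):
        print(f"  {i}. {url}")
    drop_reply = ask("Numbers to drop, comma-separated (blank to keep all): ")
    drop = {int(x) for x in drop_reply.split(",") if x.strip().isdigit()}
    kept = [u for i, u in enumerate(urls, start=1) if i not in drop]
    extra_reply = ask("Extra URLs to add, comma-separated (blank for none): ")
    extra = [u.strip() for u in extra_reply.split(",") if u.strip()]
    return kept + [u for u in extra if u not in kept]  # avoid duplicate URLs

# Scripted answers stand in for a user typing at the prompt.
answers = iter(["2", "https://my-notes.example/graphrag"])
final = human_triage(
    "Incremental updates",
    ["https://a.example", "https://spam.example", "https://b.example"],
    ask=lambda _prompt: next(answers),
)
```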

The end result is report generation that more closely resembles how Perplexity lets you refine the sources behind a web search result, as we saw in Part 1. Curation with HITL can slow down report generation but improves output quality. The next step would be to automate this to speed up refinement, while still letting the user refine the report once it is generated.
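One possible way to automate this while keeping a human involved is to use judge scores to route sources: auto-accept clear passes, auto-reject clear failures, and send only the borderline band to a reviewer. The thresholds and the `route_sources` helper are hypothetical; the scores would come from an LLM-as-a-judge step like the one mentioned earlier.

```python
def route_sources(scored: list[tuple[str, int]], accept_at: int = 4,
                  reject_below: int = 2) -> tuple[list[str], list[str], list[str]]:
    """Split (url, judge_score) pairs into auto-accepted, needs-review and auto-rejected."""
    accepted, review, rejected = [], [], []
    for url, score in scored:
        if score >= accept_at:
            accepted.append(url)   # confident pass: no human needed
        elif score < reject_below:
            rejected.append(url)   # confident fail: drop silently
        else:
            review.append(url)     # borderline: queue for human triage
    return accepted, review, rejected

accepted, review, rejected = route_sources([("a", 5), ("b", 3), ("c", 1), ("d", 2)])
```

Only the middle band reaches the human, so the slower HITL step is spent where the judge is least certain.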

Example: https://notebooklm.google.com/notebook/edb152b3-a673-4133-aed7-038c1c3cd8ff/audio