Optimise Your Research Workflow using GenAI, Part 1: Search (No-Code)

Imagine embarking on a research project where the tedious tasks of data collection and preliminary analysis are seamlessly handled by an intelligent assistant. Picture having the ability to quickly uncover trends and insights from vast amounts of information. Today, generative AI (Gen AI) makes this possible. I'm sharing some no-code examples and actionable tips on how you can use Gen AI to make your research more effective, insightful, and rewarding.

My work focuses on the innovation cycle, where staying ahead of the curve isn't just beneficial; it's essential. My role involves researching the latest technology trends to identify opportunities that can be quickly developed into lean prototypes. However, true innovation requires more than building something minimal; it demands integrating research with practical solutions that deliver business value. This requires the agility to pivot and scale with different approaches and technologies as projects evolve. Research is an ever-present part of that process, ranging from building a broad understanding to deep dives into new subject areas.

By integrating Gen AI into my research workflow, I've been able to streamline my process: conducting quick analyses, investigating trends, and adapting swiftly to new information. In this post, I'll explore how Gen AI can optimize your research journey and help you innovate more effectively.

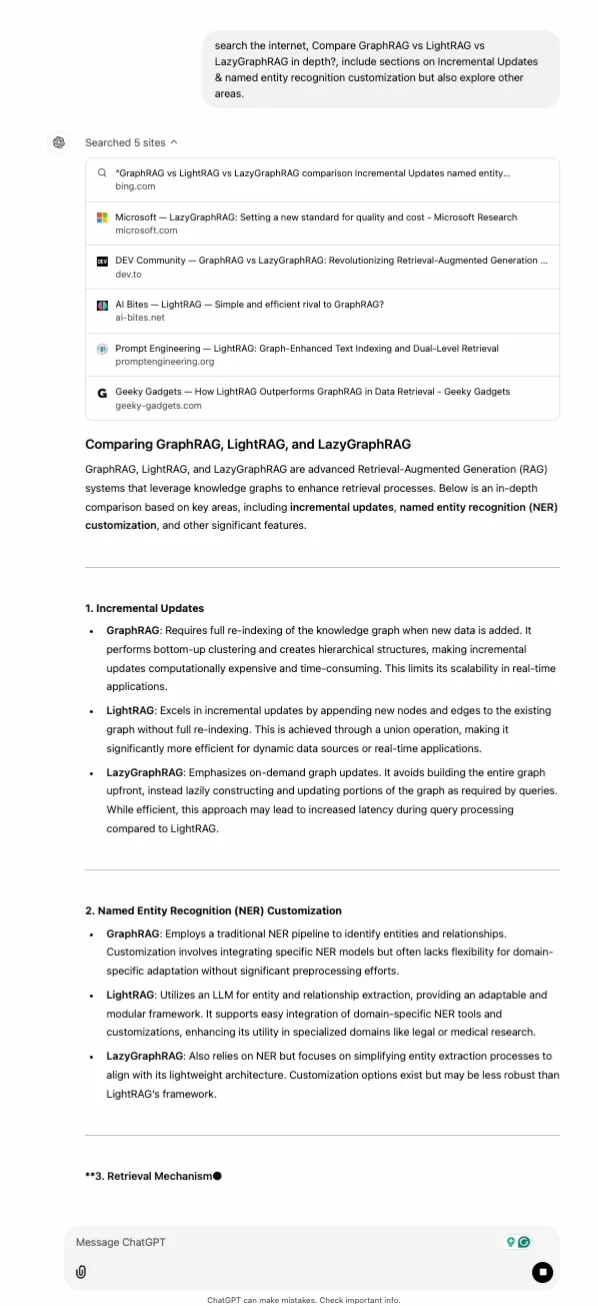

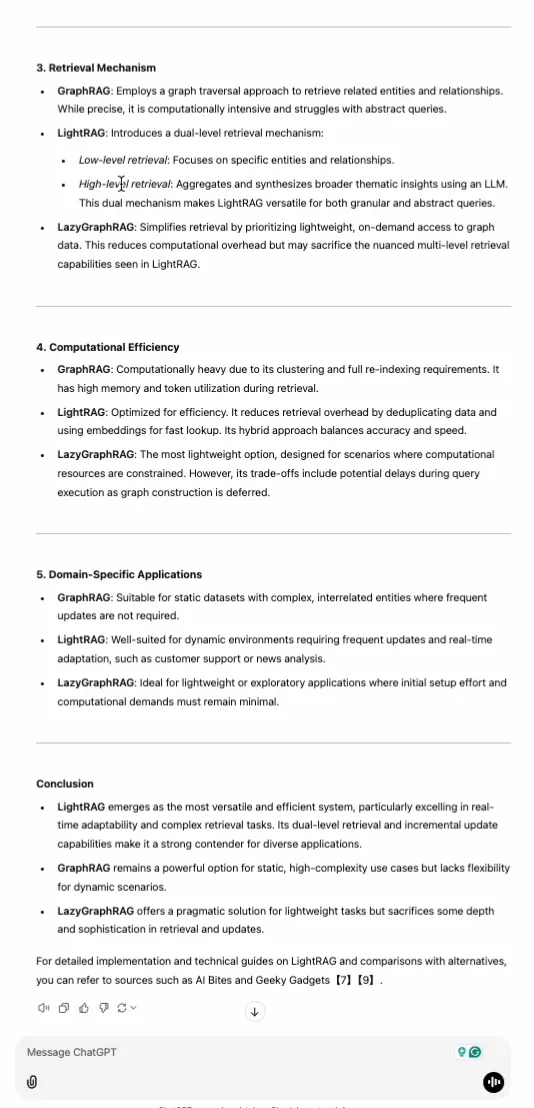

Using ChatGPT for Research

Leading providers have integrated web search into their chat interfaces, which lets us research modern, complex topics that a model may know little about. For example, we can instruct the chat interface to:

“search the internet and compare GraphRAG vs LightRAG vs LazyGraphRAG in depth. Include sections on Incremental Updates & Named Entity Recognition customization, but explore other areas.”

Modern large language models (LLMs) such as GPT-4 are trained on data with a fixed cut-off date, often many months before you actually use the model. The LLM may therefore have some knowledge of these frameworks, but that knowledge is likely out of date, so we want to augment it with recent search results.

About the subject: GraphRAG can address some limitations of standard retrieval-augmented generation (RAG), improving accuracy, contextual understanding, and relevance in response generation, but it currently faces scalability and maintainability challenges. It is therefore useful to understand whether it is close to production-ready and what it takes to maintain it long-term. In this case, the model correctly covered the constraints I had asked it to compare, such as Incremental Updates and customization of the Named Entity Recognition module.

You can get fairly good results using OpenAI's models paired with web search. However, ChatGPT's citations are currently inconsistent, which makes the answers difficult to validate.

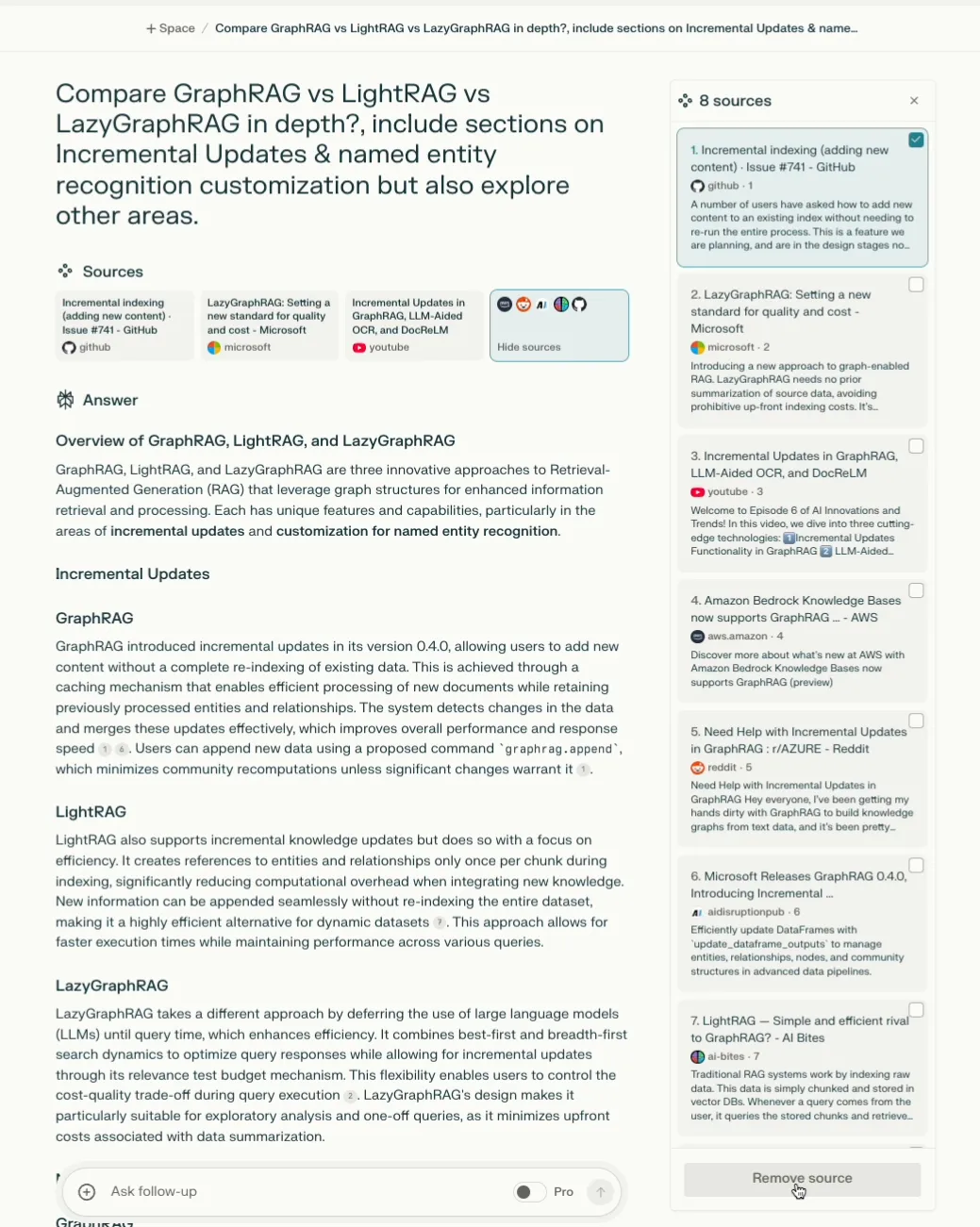

Using Perplexity for Research

Another useful research tool is Perplexity, which shows the sources behind its search-augmented answers in detail alongside its summaries. Compared with ChatGPT, it offers a broader range of sources and configuration options, including YouTube sources and the ability to remove sources you deem irrelevant. That last feature is very useful, since bad data can skew the outcome.

Hybrid Research

Adding RAG: we may want to combine our own data sources with internet sources, to make sure we cover context that recent web searches or articles may miss.

Via OpenAI

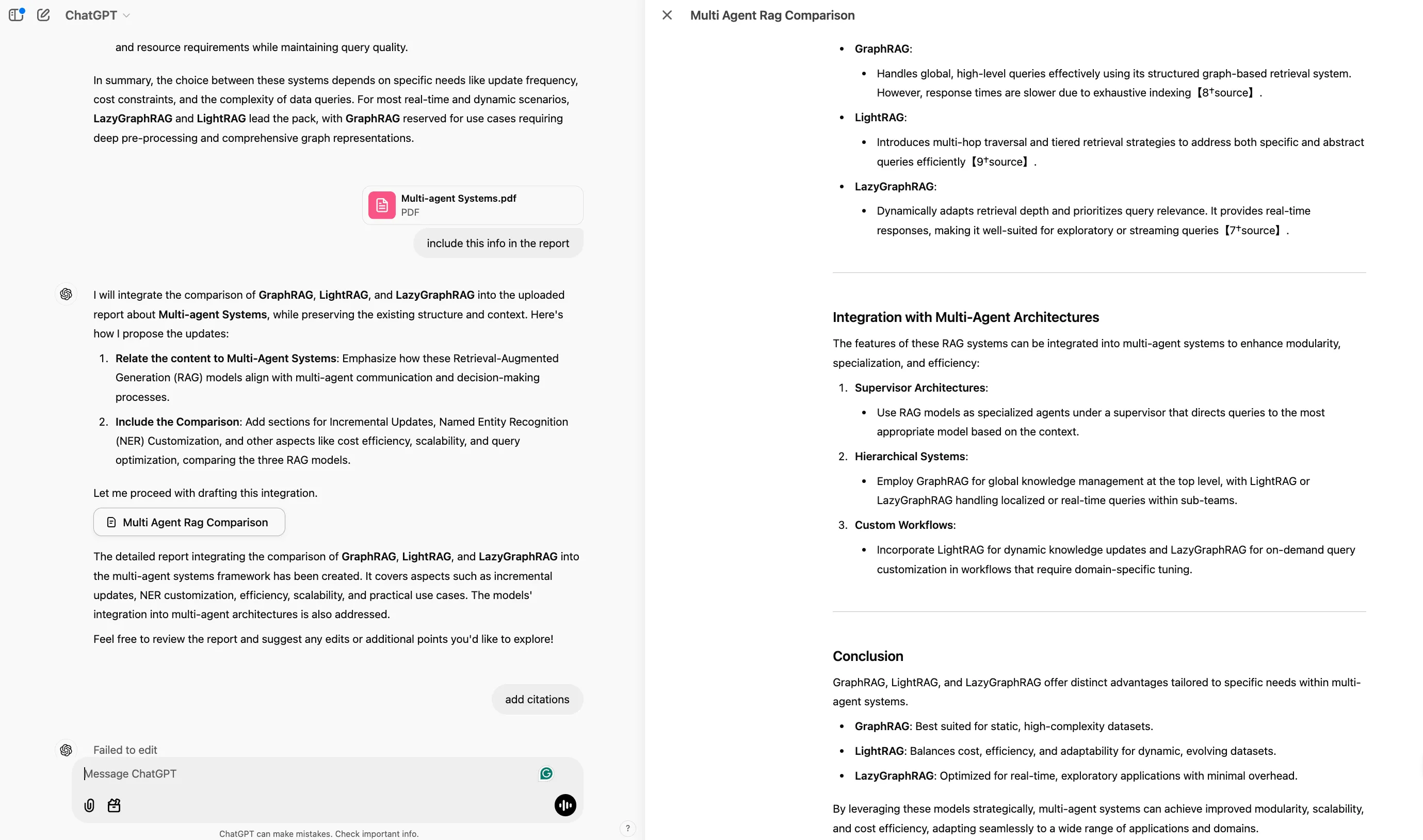

You can build on the report by uploading your own documents to augment the earlier research. The model can then draw on material from either the previous search or the uploaded documents. I did not prompt it to keep the same report format, so the format changed, which is a bit annoying, and the references in the new report are not correctly cited. With some prompt engineering, I could steer the report toward the output format I prefer.

One other note: these reports are fairly high-level comparisons. That is useful, but gathering deeper insights on each section would require further investigation, because the sources returned reflect high-level searches based on the original search prompt:

“search the internet and compare GraphRAG vs LightRAG vs LazyGraphRAG in depth. Include sections on Incremental Updates & Named Entity Recognition customization, but explore other areas.”

This is a great start, but to take it further, I would want to run more detailed searches on specific sections and relate the results back to the primary search.

In Part 2, we will look at where agent workflows are taking this.